Content from Welcome to Python

Last updated on 2023-04-18 | Edit this page

Overview

Questions

- How can I run Python programs?

Objectives

- Launch the JupyterLab server.

- Create a new Python script.

- Create a Jupyter notebook.

- Shutdown the JupyterLab server.

- Understand the difference between a Python script and a Jupyter notebook.

- Create Markdown cells in a notebook.

- Create and run Python cells in a notebook.

Key Points

- Python scripts are plain text files.

- Use the Jupyter Notebook for editing and running Python.

- The Notebook has Command and Edit modes.

- Use the keyboard and mouse to select and edit cells.

- The Notebook will turn Markdown into pretty-printed documentation.

- Markdown does most of what HTML does.

Many software developers will often use an integrated development environment (IDE) or a text editor to create and edit their Python programs which can be executed through the IDE or command line directly. While this is a common approach, we are going to use the [Jupyter Notebook][jupyter] via JupyterLab for the remainder of this workshop.

This has several advantages: * You can easily type, edit, and copy and paste blocks of code. * Tab complete allows you to easily access the names of things you are using and learn more about them. * It allows you to annotate your code with links, different sized text, bullets, etc. to make it more accessible to you and your collaborators. * It allows you to display figures next to the code that produces them to tell a complete story of the analysis.

Each notebook contains one or more cells that contain code, text, or images.

Scripts vs. Notebooks vs. Programs

A notebook, as described above, is a combination of text, figures,

results, and code in a single document. In contrast a script is a file

containing only code (and comments). A python script has the extension

.py, while a python notebook has the extension

.ipynb. Python notebooks are built ontop of Python and are

not considered a part of the Python language, but an extension to it.

Cells in a notebook can be run interactively while a script is run all

at once, typically by calling it at the command line.

A program is a set of machine instructions which are executed together. We can think about both Python scripts and notebooks as being programs. It should be noted, however, that in computer science the terms programming and scripting are defined more formally, and no Python code would be considered a program under these definitions.

Getting Started with JupyterLab

JupyterLab is an application with a web-based user interface from [Project Jupyter][jupyter] that enables one to work with documents and activities such as Jupyter notebooks, text editors, terminals, and even custom components in a flexible, integrated, and extensible manner. JupyterLab requires a reasonably up-to-date browser (ideally a current version of Chrome, Safari, or Firefox); Internet Explorer versions 9 and below are not supported.

JupyterLab is included as part of the Anaconda Python distribution. If you have not already installed the Anaconda Python distribution, see the setup instructions for installation instructions.

Even though JupyterLab is a web-based application, JupyterLab runs locally on your machine and does not require an internet connection. * The JupyterLab server sends messages to your web browser. * The JupyterLab server does the work and the web browser renders the result. * You will type code into the browser and see the result when the web page talks to the JupyterLab server.

JupyterLab? What about Jupyter notebooks?

JupyterLab is the next stage in the evolution of the Jupyter Notebook. If you have prior experience working with Jupyter notebooks, then you will have a good idea of what to expect from JupyterLab.

Experienced users of Jupyter notebooks interested in a more detailed discussion of the similarities and differences between the JupyterLab and Jupyter notebook user interfaces can find more information in the JupyterLab user interface documentation.

Starting JupyterLab

You can start the JupyterLab server through the command line or

through an application called Anaconda Navigator. Anaconda

Navigator is included as part of the Anaconda Python distribution.

macOS - Command Line

To start the JupyterLab server you will need to access the command line through the Terminal. There are two ways to open Terminal on Mac.

- In your Applications folder, open Utilities and double-click on Terminal

- Press Command + spacebar to launch Spotlight.

Type

Terminaland then double-click the search result or hit Enter

After you have launched Terminal, type the command to launch the JupyterLab server.

Windows Users - Command Line

To start the JupyterLab server you will need to access the Anaconda Prompt.

Press Windows Logo Key and search for

Anaconda Prompt, click the result or press enter.

After you have launched the Anaconda Prompt, type the command:

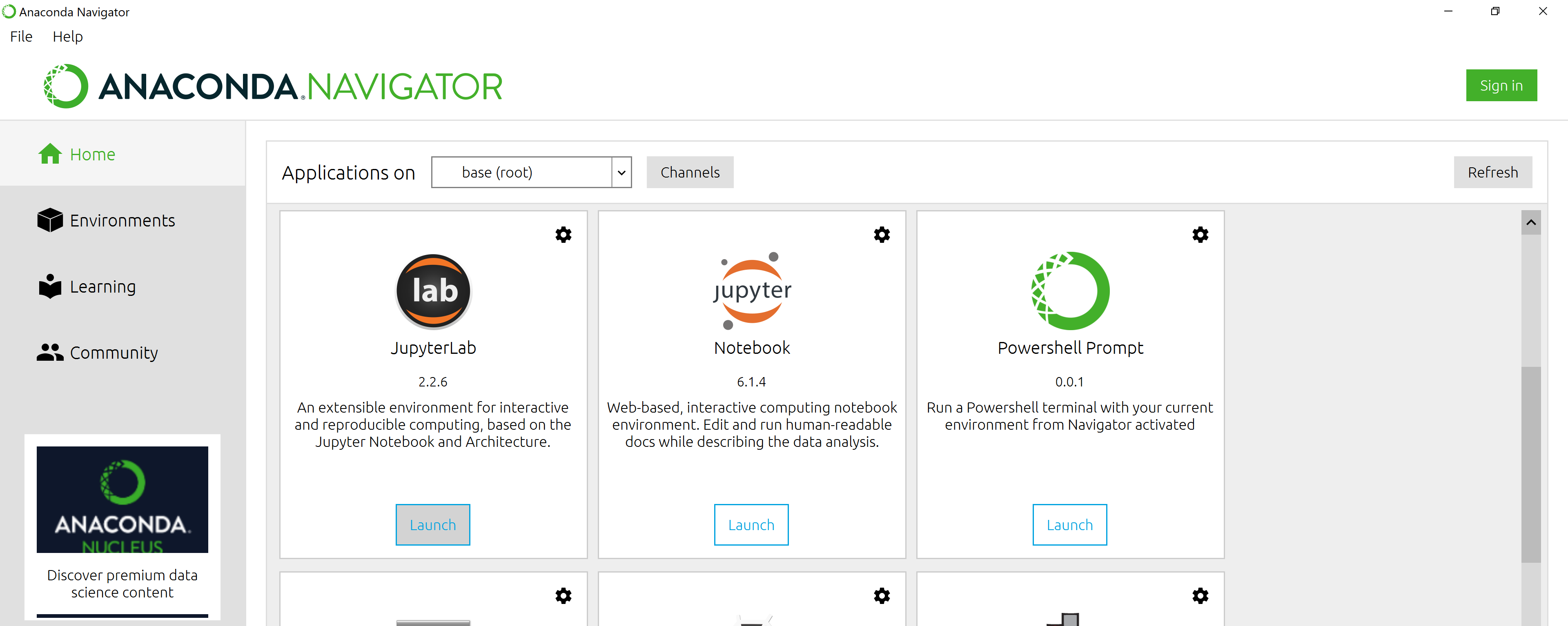

Anaconda Navigator

To start a JupyterLab server from Anaconda Navigator you must first

start

Anaconda Navigator (click for detailed instructions on macOS, Windows,

and Linux). You can search for Anaconda Navigator via Spotlight on

macOS (Command + spacebar), the Windows search

function (Windows Logo Key) or opening a terminal shell and

executing the anaconda-navigator executable from the

command line.

After you have launched Anaconda Navigator, click the

Launch button under JupyterLab. You may need to scroll down

to find it.

Here is a screenshot of an Anaconda Navigator page similar to the one that should open on either macOS or Windows.

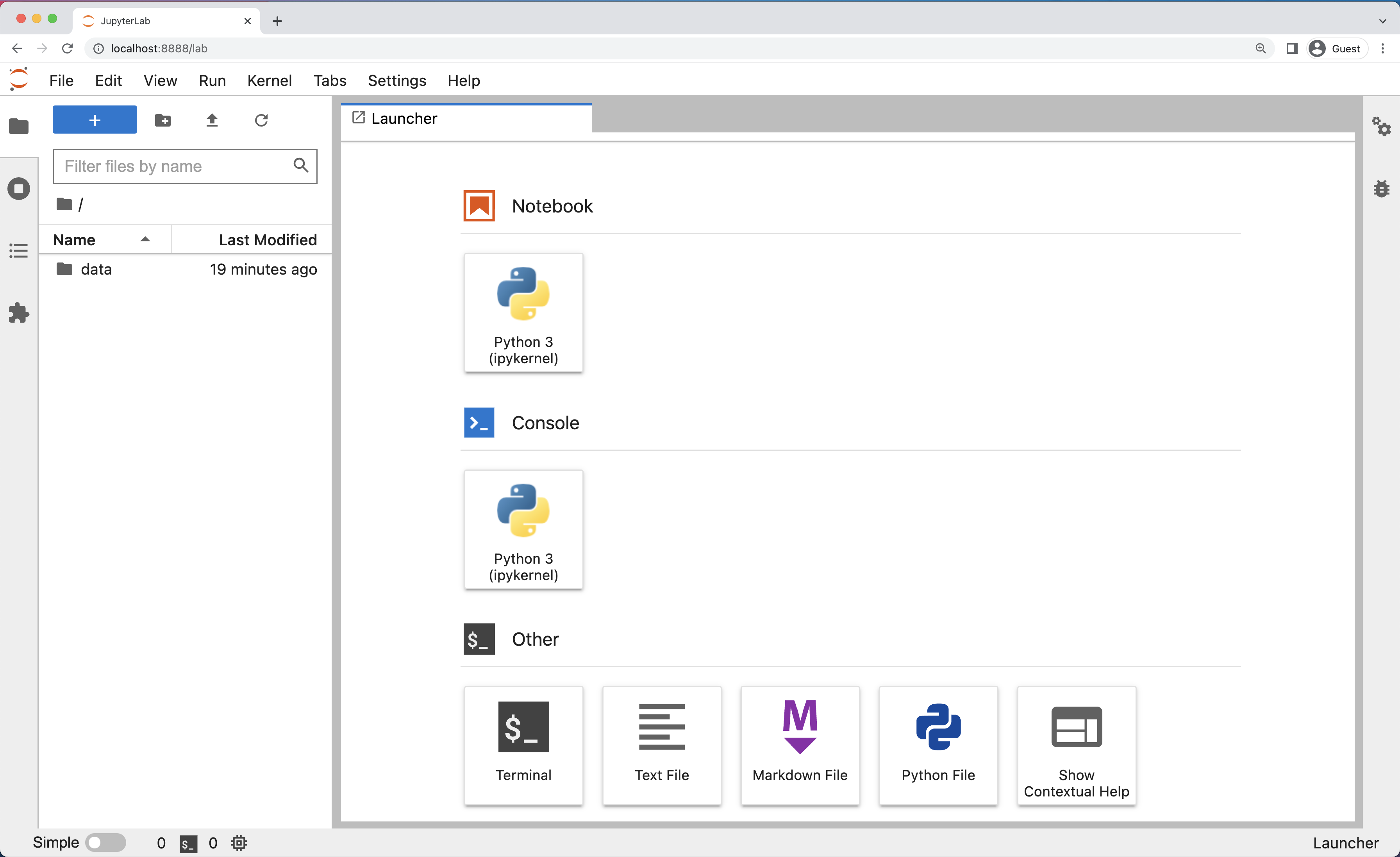

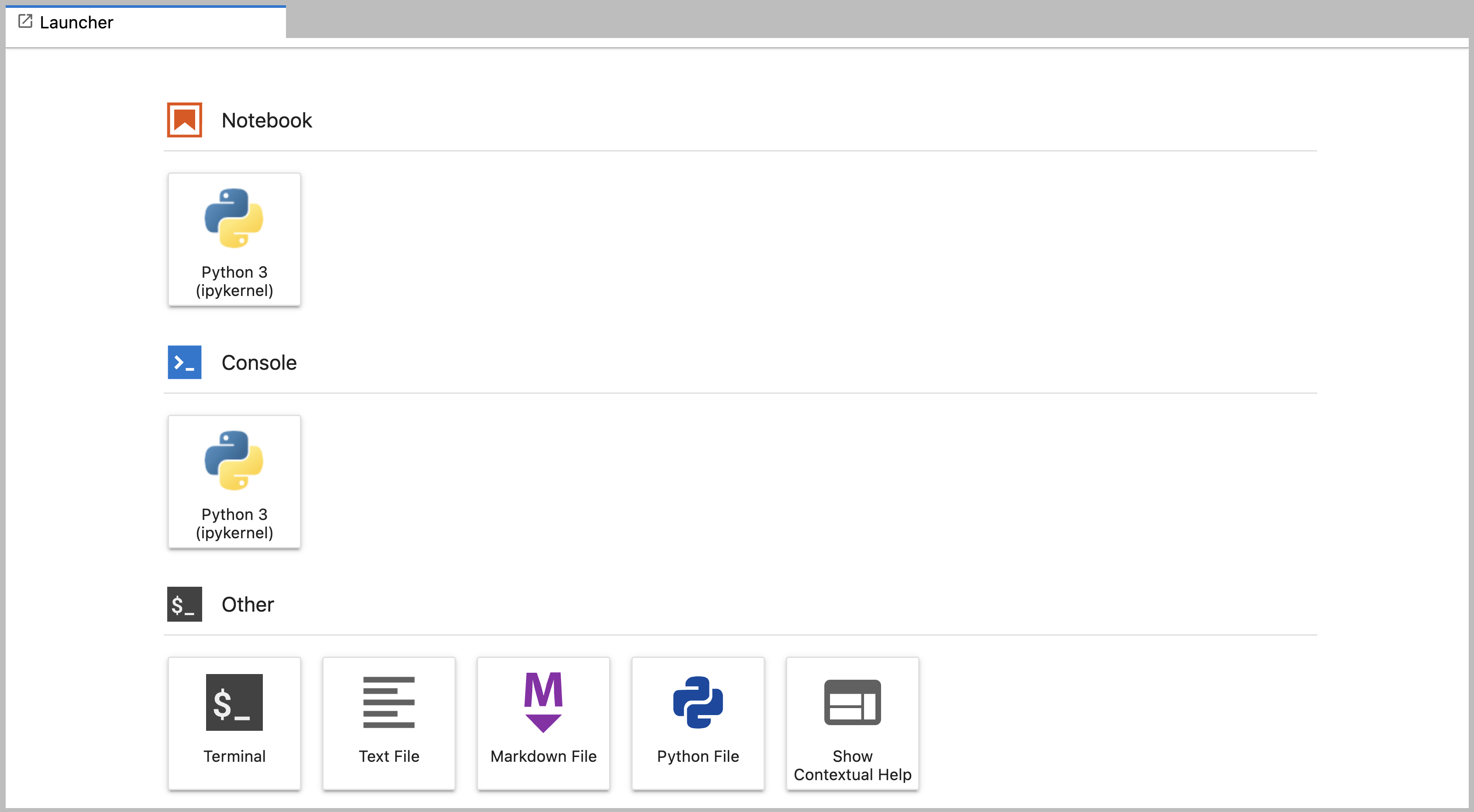

And here is a screenshot of a JupyterLab landing page that should be similar to the one that opens in your default web browser after starting the JupyterLab server on either macOS or Windows.

The JupyterLab Interface

JupyterLab has many features found in traditional integrated development environments (IDEs) but is focused on providing flexible building blocks for interactive, exploratory computing.

The JupyterLab Interface consists of the Menu Bar, a collapsable Left Side Bar, and the Main Work Area which contains tabs of documents and activities.

Menu Bar

The Menu Bar at the top of JupyterLab has the top-level menus that expose various actions available in JupyterLab along with their keyboard shortcuts (where applicable). The following menus are included by default.

- File: Actions related to files and directories such as New, Open, Close, Save, etc. The File menu also includes the Shut Down action used to shutdown the JupyterLab server.

- Edit: Actions related to editing documents and other activities such as Undo, Cut, Copy, Paste, etc.

- View: Actions that alter the appearance of JupyterLab.

- Run: Actions for running code in different activities such as notebooks and code consoles (discussed below).

- Kernel: Actions for managing kernels. Kernels in Jupyter will be explained in more detail below.

- Tabs: A list of the open documents and activities in the main work area.

- Settings: Common JupyterLab settings can be configured using this menu. There is also an Advanced Settings Editor option in the dropdown menu that provides more fine-grained control of JupyterLab settings and configuration options.

- Help: A list of JupyterLab and kernel help links.

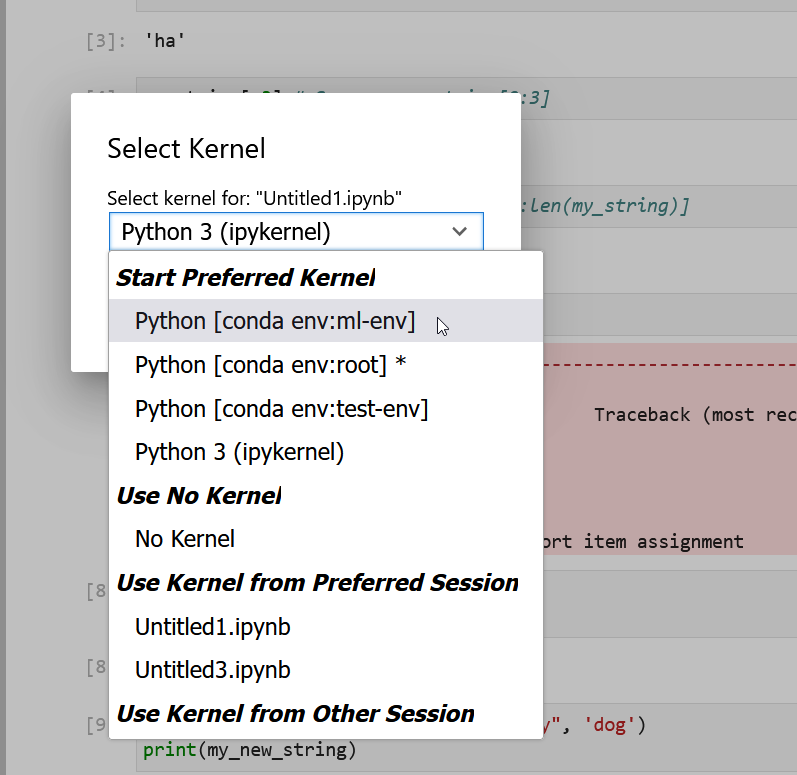

Kernels

The JupyterLab docs define kernels as “separate processes started by the server that run your code in different programming languages and environments.” When we open a Jupyter Notebook, that starts a kernel - a process - that is going to run the code. In this lesson, we’ll be using the Jupyter ipython kernel which lets us run Python 3 code interactively.

Using other Jupyter kernels for other programming languages would let us write and execute code in other programming languages in the same JupyterLab interface, like R, Java, Julia, Ruby, JavaScript, Fortran, etc.

A screenshot of the default Menu Bar is provided below.

Left Sidebar

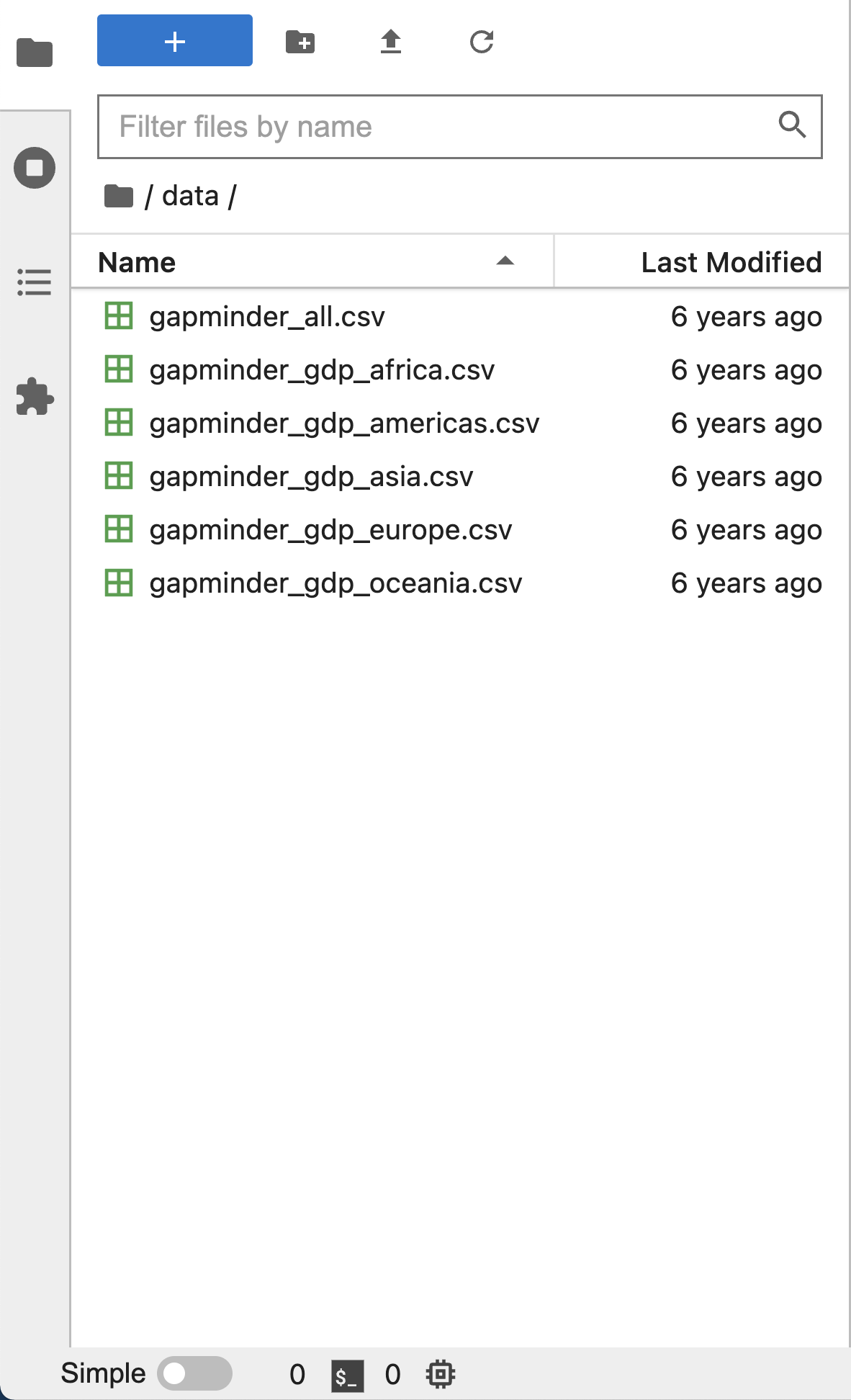

The left sidebar contains a number of commonly used tabs, such as a file browser (showing the contents of the directory where the JupyterLab server was launched), a list of running kernels and terminals, the command palette, and a list of open tabs in the main work area. A screenshot of the default Left Side Bar is provided below.

The left sidebar can be collapsed or expanded by selecting “Show Left Sidebar” in the View menu or by clicking on the active sidebar tab.

Main Work Area

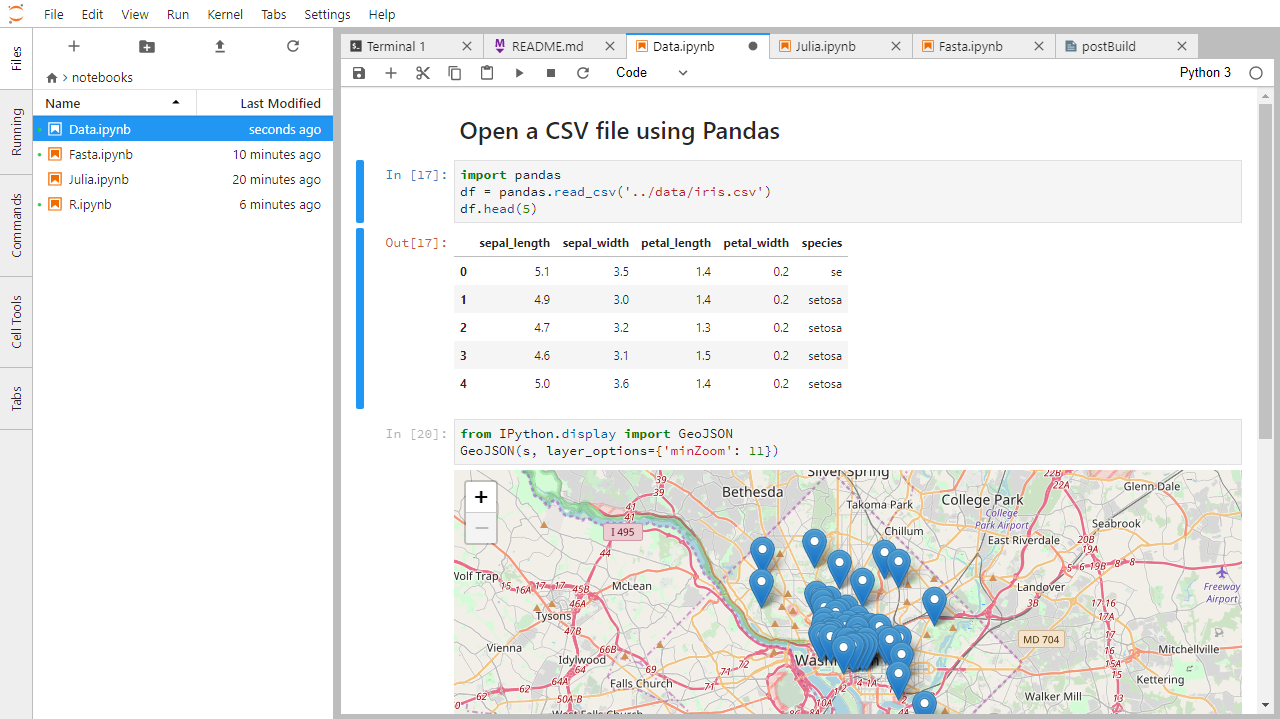

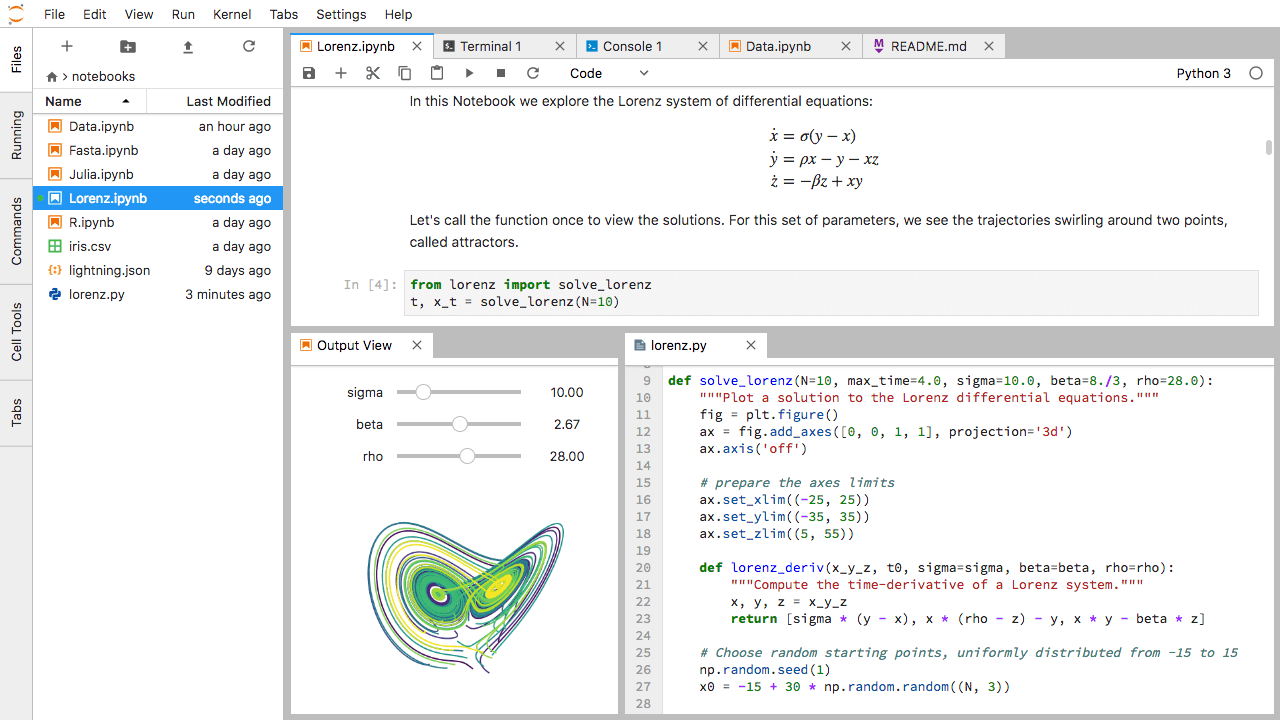

The main work area in JupyterLab enables you to arrange documents (notebooks, text files, etc.) and other activities (terminals, code consoles, etc.) into panels of tabs that can be resized or subdivided. A screenshot of the default Main Work Area is provided below.

Drag a tab to the center of a tab panel to move the tab to the panel. Subdivide a tab panel by dragging a tab to the left, right, top, or bottom of the panel. The work area has a single current activity. The tab for the current activity is marked with a colored top border (blue by default).

Creating a Python script

- To start writing a new Python program click the Text File icon under

the Other header in the Launcher tab of the Main Work Area.

- You can also create a new plain text file by selecting the New -> Text File from the File menu in the Menu Bar.

- To convert this plain text file to a Python program, select the

Save File As action from the File menu in the Menu Bar

and give your new text file a name that ends with the

.pyextension.- The

.pyextension lets everyone (including the operating system) know that this text file is a Python program. - This is convention, not a requirement.

- The

Creating a Jupyter Notebook

To open a new notebook click the Python 3 icon under the Notebook header in the Launcher tab in the main work area. You can also create a new notebook by selecting New -> Notebook from the File menu in the Menu Bar.

Additional notes on Jupyter notebooks.

- Notebook files have the extension

.ipynbto distinguish them from plain-text Python programs. - Notebooks can be exported as Python scripts that can be run from the command line.

Below is a screenshot of a Jupyter notebook running inside JupyterLab. If you are interested in more details, then see the official notebook documentation.

How It’s Stored

- The notebook file is stored in a format called JSON.

- Just like a webpage, what’s saved looks different from what you see in your browser.

- But this format allows Jupyter to mix source code, text, and images, all in one file.

Arranging Documents into Panels of Tabs

In the JupyterLab Main Work Area you can arrange documents into panels of tabs. Here is an example from the official documentation.

First, create a text file, Python console, and terminal window and arrange them into three panels in the main work area. Next, create a notebook, terminal window, and text file and arrange them into three panels in the main work area. Finally, create your own combination of panels and tabs. What combination of panels and tabs do you think will be most useful for your workflow?

After creating the necessary tabs, you can drag one of the tabs to the center of a panel to move the tab to the panel; next you can subdivide a tab panel by dragging a tab to the left, right, top, or bottom of the panel.

Code vs. Text

Jupyter mixes code and text in different types of blocks, called cells. We often use the term “code” to mean “the source code of software written in a language such as Python”. A “code cell” in a Notebook is a cell that contains software; a “text cell” is one that contains ordinary prose written for human beings.

The Notebook has Command and Edit modes.

- If you press Esc and Return alternately, the outer border of your code cell will change from gray to blue.

- These are the Command (gray) and Edit (blue) modes of your notebook.

- Command mode allows you to edit notebook-level features, and Edit mode changes the content of cells.

- When in Command mode (esc/gray),

- The b key will make a new cell below the currently selected cell.

- The a key will make one above.

- The x key will delete the current cell.

- The z key will undo your last cell operation (which could be a deletion, creation, etc).

- All actions can be done using the menus, but there are lots of keyboard shortcuts to speed things up.

Command Vs. Edit

In the Jupyter notebook page are you currently in Command or Edit

mode?

Switch between the modes. Use the shortcuts to generate a new cell. Use

the shortcuts to delete a cell. Use the shortcuts to undo the last cell

operation you performed.

Command mode has a grey border and Edit mode has a blue border. Use Esc and Return to switch between modes. You need to be in Command mode (Press Esc if your cell is blue). Type b or a. You need to be in Command mode (Press Esc if your cell is blue). Type x. You need to be in Command mode (Press Esc if your cell is blue). Type z.

Use the keyboard and mouse to select and edit cells.

- Pressing the Return key turns the border blue and engages Edit mode, which allows you to type within the cell.

- Because we want to be able to write many lines of code in a single cell, pressing the Return key when in Edit mode (blue) moves the cursor to the next line in the cell just like in a text editor.

- We need some other way to tell the Notebook we want to run what’s in the cell.

- Pressing Shift+Return together will execute the contents of the cell.

- Notice that the Return and Shift keys on the right of the keyboard are right next to each other.

The Notebook will turn Markdown into pretty-printed documentation.

- Notebooks can also render Markdown.

- A simple plain-text format for writing lists, links, and other things that might go into a web page.

- Equivalently, a subset of HTML that looks like what you’d send in an old-fashioned email.

- Turn the current cell into a Markdown cell by entering the Command mode (Esc/gray) and press the M key.

-

In [ ]:will disappear to show it is no longer a code cell and you will be able to write in Markdown. - Turn the current cell into a Code cell by entering the Command mode (Esc/gray) and press the y key.

Markdown does most of what HTML does.

* Use asterisks

* to create

* bullet lists.- Use asterisks

- to create

- bullet lists.

1. Use numbers

1. to create

1. numbered lists.- Use numbers

- to create

- numbered lists.

* You can use indents

* To create sublists

* of the same type

* Or sublists

1. Of different

1. types- You can use indents

- To create sublists

- of the same type

- Or sublists

- Of different

- types

Line breaks

don't matter.

But blank lines

create new paragraphs.Line breaks don’t matter.

But blank lines create new paragraphs.

[Create links](http://software-carpentry.org) with `[...](...)`.

Or use [named links][data_carpentry].

[data_carpentry]: http://datacarpentry.orgCreate links with

[...](...). Or use named

links.

Creating Lists in Markdown

Create a nested list in a Markdown cell in a notebook that looks like this:

- Get funding.

- Do work.

- Design experiment.

- Collect data.

- Analyze.

- Write up.

- Publish.

This challenge integrates both the numbered list and bullet list. Note that the bullet list is indented 2 spaces so that it is inline with the items of the numbered list.

1. Get funding.

1. Do work.

* Design experiment.

* Collect data.

* Analyze.

1. Write up.

1. Publish.Python returns the output of the last calculation.

OUTPUT

3Change an Existing Cell from Code to Markdown

What happens if you write some Python in a code cell and then you switch it to a Markdown cell? For example, put the following in a code cell:

And then run it with Shift+Return to be sure that it works as a code cell. Now go back to the cell and use Esc then m to switch the cell to Markdown and “run” it with Shift+Return. What happened and how might this be useful?

Equations

Standard Markdown (such as we’re using for these notes) won’t render equations, but the Notebook will. Create a new Markdown cell and enter the following:

$\sum_{i=1}^{N} 2^{-i} \approx 1$(It’s probably easier to copy and paste.) What does it display? What

do you think the underscore, _, circumflex, ^,

and dollar sign, $, do?

The notebook shows the equation as it would be rendered from LaTeX

equation syntax. The dollar sign, $, is used to tell

Markdown that the text in between is a LaTeX equation. If you’re not

familiar with LaTeX, underscore, _, is used for subscripts

and circumflex, ^, is used for superscripts. A pair of

curly braces, { and }, is used to group text

together so that the statement i=1 becomes the subscript

and N becomes the superscript. Similarly, -i

is in curly braces to make the whole statement the superscript for

2. \sum and \approx are LaTeX

commands for “sum over” and “approximate” symbols.

Closing JupyterLab

- From the Menu Bar select the “File” menu and then choose “Shut Down” at the bottom of the dropdown menu. You will be prompted to confirm that you wish to shutdown the JupyterLab server (don’t forget to save your work!). Click “Shut Down” to shutdown the JupyterLab server.

- To restart the JupyterLab server you will need to re-run the following command from a shell.

$ jupyter labClosing JupyterLab

Practice closing and restarting the JupyterLab server.

Content from Variables in Python

Last updated on 2023-04-18 | Edit this page

Overview

Questions

- How do I run Python code?

- What are variables?

- How do I set variables in Python?

Objectives

- Use the Python console to perform calculations.

- Assign values to variables in Python.

- Reuse variables in Python.

Key Points

- Python is an interpreted programming language, and can be used interactively.

-

Values are assigned to variables

in Python using

=. - You can use

printto output variable values. - Use meaningful variable names.

Variables store values.

- Variables are names for values.

- In Python the

=symbol assigns the value on the right to the name on the left. - The variable is created when a value is assigned to it.

- Here, Python assigns an age to a variable

ageand a name in quotes to a variablefirst_name.

- Variable names

- can only contain letters, digits, and underscore

_(typically used to separate words in long variable names) - cannot start with a digit

- are case sensitive (age, Age and AGE are three different variables)

- can only contain letters, digits, and underscore

- Variable names that start with underscores like

__alistairs_real_agehave a special meaning so we won’t do that until we understand the convention.

Use print to display values.

Callout

A string is the type which stores text in Python. Strings can be thought of as sequences or strings of individual characters (and using the words string this way actually goes back to a printing term in the pre-computer era). We will be learning more about types and strings in other lessons.

- Python has a built-in function called

printthat prints things as text. - Call the function (i.e., tell Python to run it) by using its name.

- Provide values to the function (i.e., the things to print) in parentheses.

- To add a string to the printout, wrap the string in single or double quotes.

- The values passed to the function are called arguments

Single vs. double quotes

In Python, you can use single quotes or double quotes to denote a string, but you need to use the same one for both the beginning and the end.

In Python,

OUTPUT

Ahmed is 42 years old-

printautomatically puts a single space between items to separate them.

In when using Jupyter notebooks, we can also simply write a variable name and its value will be displayed:

OUTPUT

42However, this will not work in other programming environments or when running scripts. We will be displaying variables using both methods throughout this workshop.

Variables must be created before they are used.

- If a variable doesn’t exist yet, or if the name has been mis-spelled, Python reports an error. (Unlike some languages, which “guess” a default value.)

ERROR

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-1-c1fbb4e96102> in <module>()

----> 1 print(last_name)

NameError: name 'last_name' is not defined- The last line of an error message is usually the most informative.

- We will look at error messages in detail later.

Variables Persist Between Cells

Be aware that it is the order of execution of cells that is important in a Jupyter notebook, not the order in which they appear. Python will remember all the code that was run previously, including any variables you have defined, irrespective of the order in the notebook. Therefore if you define variables lower down the notebook and then (re)run cells further up, those defined further down will still be present. As an example, create two cells with the following content, in this order:

If you execute this in order, the first cell will give an error.

However, if you run the first cell after the second cell it

will print out 1. To prevent confusion, it can be helpful

to use the Kernel -> Restart & Run All

option which clears the interpreter and runs everything from a clean

slate going top to bottom.

Variables can be used in calculations.

- We can use variables in calculations just as if they were values.

- Remember, we assigned the value

42toagea few lines ago.

- Remember, we assigned the value

OUTPUT

Age in three years: 45Python is case-sensitive.

- Python thinks that upper- and lower-case letters are different, so

Nameandnameare different variables. - There are conventions for using upper-case letters at the start of variable names so we will use lower-case letters for now.

Use meaningful variable names.

- Python doesn’t care what you call variables as long as they obey the rules (alphanumeric characters and the underscore).

- Use meaningful variable names to help other people understand what the program does.

- The most important “other person” is your future self.

Try using this python visualization tool to visualize what happens in the code.

OUTPUT

# Command # Value of x # Value of y # Value of swap #

x = 1.0 # 1.0 # not defined # not defined #

y = 3.0 # 1.0 # 3.0 # not defined #

swap = x # 1.0 # 3.0 # 1.0 #

x = y # 3.0 # 3.0 # 1.0 #

y = swap # 3.0 # 1.0 # 1.0 #These three lines exchange the values in x and

y using the swap variable for temporary

storage. This is a fairly common programming idiom.

Choosing a Name

Which is a better variable name, m, min, or

minutes? Why?

Hint: think about which code you would rather inherit from someone who is leaving the lab:

ts = m * 60 + stot_sec = min * 60 + sectotal_seconds = minutes * 60 + seconds

minutes is better because min might mean

something like “minimum” (and actually is an existing built-in function

in Python that we will cover later).

Content from Basic Types

Last updated on 2023-04-18 | Edit this page

Overview

Questions

- What kinds of data are there in Python?

- How are different data types treated differently?

- How can I identify a variable’s type?

- How can I convert between types?

Objectives

- Explain key differences between integers and floating point numbers.

- Explain key differences between numbers and character strings.

- Use built-in functions to convert between integers, floating point numbers, and strings.

- Subset a string using slicing.

Key Points

- Every value has a type.

- Use the built-in function

typeto find the type of a value. - Types control what operations can be done on values.

- Strings can be added and multiplied.

- Strings have a length (but numbers don’t).

Every value has a type.

- Every value in a program has a specific type.

- Integer (

int): represents positive or negative whole numbers like 3 or -512. - Floating point number (

float): represents real numbers like 3.14159 or -2.5. - Character string (usually called “string”,

str): text.- Written in either single quotes or double quotes (as long as they match).

- The quote marks aren’t printed when the string is displayed.

Use the built-in function type to find the type of a

value.

- Use the built-in function

typeto find out what type a value has. - Works on variables as well.

- But remember: the value has the type — the variable is just a label.

OUTPUT

<class 'int'>OUTPUT

<class 'str'>Types control what operations (or methods) can be performed on a given value.

- A value’s type determines what the program can do to it.

OUTPUT

2OUTPUT

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-2-67f5626a1e07> in <module>()

----> 1 print('hello' - 'h')

TypeError: unsupported operand type(s) for -: 'str' and 'str'Simple and compound types.

We can broadly split different types into two categories in Python: simple types and compound types.

Simple types consist of a single value. In Python, these simple types include:

intfloatboolNoneType

Compound types contain multiple values. Compound types include:

strlistdictionarytupleset

In this lesson we will be learning about simple types and strings

(str), which are made up of multiple characters. We will go

into more detail on other compound types in future lessons.

You can use the “+” and “*” operators on strings.

- “Adding” character strings concatenates them.

OUTPUT

Ahmed WalshThe empty string

We can initialize a string to contain no letter,

empty = "". This is called the empty

string, and is often used when we want to build up a string

character by character

- Multiplying a character string by an integer N creates a

new string that consists of that character string repeated N

times.

- Since multiplication is repeated addition.

OUTPUT

==========Strings have a length (but numbers don’t).

- The built-in function

lencounts the number of characters in a string.

OUTPUT

11- But numbers don’t have a length (not even zero).

OUTPUT

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-3-f769e8e8097d> in <module>()

----> 1 print(len(52))

TypeError: object of type 'int' has no len()You must convert numbers to strings or vice versa when operating on them.

- Cannot add numbers and strings.

ERROR

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-4-fe4f54a023c6> in <module>()

----> 1 print(1 + '2')

TypeError: unsupported operand type(s) for +: 'int' and 'str'- The result here would be

3if both values were consideredints, and'12'if both values were considered strings. Due to this ambiguity addition is not allowed between strings and integers. - Some types can be converted to other types by using the type name as a function.

OUTPUT

3

12You can mix integers and floats freely in operations.

- Integers and floating-point numbers can be mixed in arithmetic.

- Python 3 automatically converts integers to floats as needed.

OUTPUT

half is 0.5

three squared is 9.0Variables only change value when something is assigned to them.

- If we make one cell in a spreadsheet depend on another, and update the latter, the former updates automatically.

- This does not happen in programming languages.

PYTHON

variable_one = 1

variable_two = 5 * variable_one

variable_one = 2

print('first is', variable_one, 'and second is', variable_two)OUTPUT

first is 2 and second is 5- The computer reads the value of

variable_onewhen doing the multiplication, creates a new value, and assigns it tovariable_two. - Afterwards, the value of

variable_twois set to the new value and not dependent onvariable_oneso its value does not automatically change whenvariable_onechanges.

Fractions

What type of value is 3.4? How can you find out?

Automatic Type Conversion

What type of value is 3.25 + 4?

Choose a Type

What type of value (integer, floating point number, or character string) would you use to represent each of the following? Try to come up with more than one good answer for each problem. For example, in # 1, when would counting days with a floating point variable make more sense than using an integer?

- Number of days since the start of the year.

- Time elapsed from the start of the year until now in days.

- Serial number of a piece of lab equipment.

- A lab specimen’s age

- Current population of a city.

- Average population of a city over time.

The answers to the questions are: 1. Integer, since the number of days would lie between 1 and 365. 2. Floating point, since fractional days are required 3. Character string if serial number contains letters and numbers, otherwise integer if the serial number consists only of numerals 4. This will vary! How do you define a specimen’s age? whole days since collection (integer)? date and time (string)? 5. Choose floating point to represent population as large aggregates (eg millions), or integer to represent population in units of individuals. 6. Floating point number, since an average is likely to have a fractional part.

Division Types

In Python 3, the // operator performs integer

(whole-number) floor division, the / operator performs

floating-point division, and the % (or modulo)

operator calculates and returns the remainder from integer division:

OUTPUT

5 // 3: 1

5 / 3: 1.6666666666666667

5 % 3: 2Imagine that you are buying cages for mice. num_mice is

the number of mice you need cages for, and num_per_cage is

the maximum number of mice which can live in a single cage. Write an

expression that calculates the exact number of cages you need to

purchase.

We want the number of cages to house all of our mice, which is the

rounded up result of num_mice / num_per_cage. This is

equivalent to performing a floor division with // and

adding 1. Before the division we need to subtract 1 from the number of

mice to deal with the case where num_mice is evenly

divisible by num_per_cage.

PYTHON

num_cages = ((num_mice - 1) // num_per_cage) + 1

print(num_mice, 'mice,', num_per_cage, 'per cage:', num_cages, 'cages')OUTPUT

56 mice, 3 per cage: 19 cagesStrings to Numbers

Where reasonable, float() will convert a string to a

floating point number, and int() will convert a floating

point number to an integer:

OUTPUT

string to float: 3.4

float to int: 3Note that converting a float to an int does

not round the result, but instead truncates it by removing everything

past the decimal point.

If the conversion doesn’t make sense, however, an error message will occur.

ERROR

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-5-df3b790bf0a2> in <module>

----> 1 print("string to float:", float("Hello world!"))

ValueError: could not convert string to float: 'Hello world!'What do you expect this program to do? It would not be so

unreasonable to expect the Python 3 int command to convert

the string “3.4” to 3.4 and an additional type conversion to 3. After

all, Python 3 performs a lot of other magic - isn’t that part of its

charm?

ERROR

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-2-ec6729dfccdc> in <module>

----> 1 int("3.4")

ValueError: invalid literal for int() with base 10: '3.4'However, Python 3 throws an error. Why? To be consistent, possibly. If you ask Python to perform two consecutive typecasts, you must convert it explicitly in code.

OUTPUT

3Challenge

Arithmetic with Different Types

Which of the following will return the floating point number

2.0? Note: there may be more than one right answer.

first + float(second)float(second) + float(third)first + int(third)first + int(float(third))int(first) + int(float(third))2.0 * second

Answer: 1 and 4

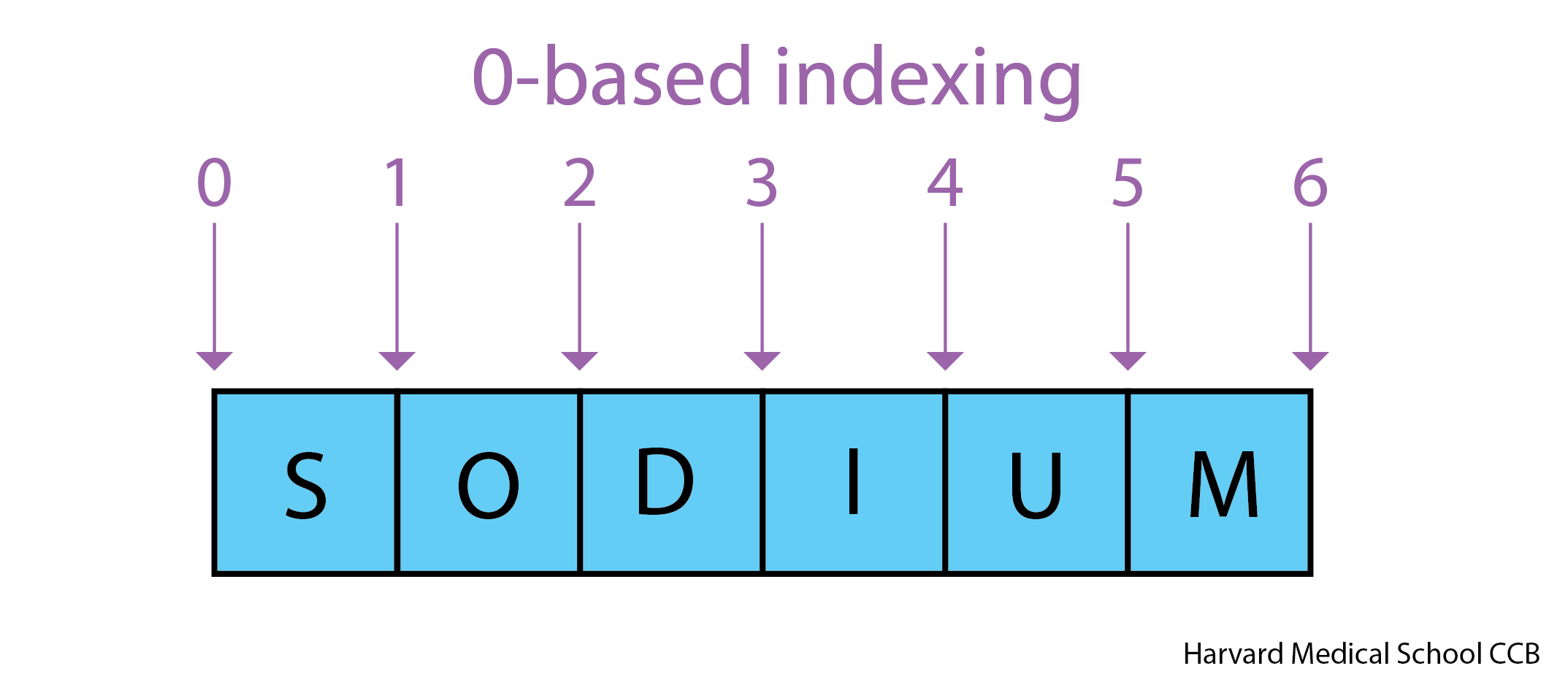

Use an index to get a single character from a string.

- The characters (individual letters, numbers, and so on) in a string

are ordered. For example, the string

'AB'is not the same as'BA'. Because of this ordering, we can treat the string as a list of characters. - Each position in the string (first, second, etc.) is given a number. This number is called an index or sometimes a subscript.

- Indices are numbered from 0.

- Use the position’s index in square brackets to get the character at that position.

OUTPUT

sUse a slice to get a substring.

- A part of a string is called a substring. A substring can be as short as a single character.

- An item in a list is called an element. Whenever we treat a string as if it were a list, the string’s elements are its individual characters.

- Slicing gets a part of a string (or, more generally, a part of any list-like thing).

- Slicing uses the notation

[start:stop], wherestartis the integer index of the first element we want andstopis the integer index of the element just after the last element we want. - The difference between

stopandstartis the slice’s length. - Taking a slice does not change the contents of the original string. Instead, taking a slice returns a copy of part of the original string.

OUTPUT

sodUse the built-in function len to find the length of a

string.

OUTPUT

6- Nested functions are evaluated from the inside out, like in mathematics.

Indexing

If you assign a = 123, what happens if you try to get

the second digit of a via a[1]?

Numbers are not strings or sequences and Python will raise an error

if you try to perform an index operation on a number. In the next

lesson on types and type conversion we will learn more about types

and how to convert between different types. If you want the Nth digit of

a number you can convert it into a string using the str

built-in function and then perform an index operation on that

string.

OUTPUT

TypeError: 'int' object is not subscriptableOUTPUT

2OUTPUT

atom_name[1:3] is: arSlicing concepts

Given the following string:

What would these expressions return?

species_name[2:8]-

species_name[11:](without a value after the colon) -

species_name[:4](without a value before the colon) -

species_name[:](just a colon) species_name[11:-3]species_name[-5:-3]- What happens when you choose a

stopvalue which is out of range? (i.e., tryspecies_name[0:20]orspecies_name[:103])

-

species_name[2:8]returns the substring'acia b' -

species_name[11:]returns the substring'folia', from position 11 until the end -

species_name[:4]returns the substring'Acac', from the start up to but not including position 4 -

species_name[:]returns the entire string'Acacia buxifolia' -

species_name[11:-3]returns the substring'fo', from the 11th position to the third last position -

species_name[-5:-3]also returns the substring'fo', from the fifth last position to the third last - If a part of the slice is out of range, the operation does not fail.

species_name[0:20]gives the same result asspecies_name[0:], andspecies_name[:103]gives the same result asspecies_name[:]

Content from Built-in Functions and Help

Last updated on 2023-04-14 | Edit this page

Overview

Questions

- How can I use built-in functions?

- How can I find out what they do?

- What kind of errors can occur in programs?

Objectives

- Explain the purpose of functions.

- Correctly call built-in Python functions.

- Correctly nest calls to built-in functions.

- Use help to display documentation for built-in functions.

- Correctly describe situations in which SyntaxError and NameError occur.

Key Points

- Use comments to add documentation to programs.

- A function may take zero or more arguments.

- Commonly-used built-in functions include

max,min, andround. - Functions may only work for certain (combinations of) arguments.

- Functions may have default values for some arguments.

- Use the built-in function

helpto get help for a function. - Python reports a syntax error when it can’t understand the source of a program.

- Python reports a runtime error when something goes wrong while a program is executing.

Use comments to add documentation to programs.

PYTHON

# This sentence isn't executed by Python.

adjustment = 0.5 # Neither is this - anything after '#' is ignored.Comments are important for the readability of your code. They are where you can explain what your code is doing, decisions you made, and things to watch out for when using the code.

Well-commented code will be easier to use by others, and by you if you are returning to code you wrote months or years ago.

A function may take zero or more arguments.

- We have seen some functions already — now let’s take a closer look.

- An argument is a value passed into a function.

-

lentakes exactly one. -

int,str, andfloatcreate a new value from an existing one. -

printtakes zero or more. -

printwith no arguments prints a blank line.- Must always use parentheses, even if they’re empty, so that Python knows a function is being called.

OUTPUT

before

afterEvery function returns something.

- Every function call produces some result.

- If the function doesn’t have a useful result to return, it usually

returns the special value

None.Noneis a Python object that stands in anytime there is no value.

Note that even though we set the result of print equal to a variable, printing still occurs.

OUTPUT

example

result of print is NoneCommonly-used built-in functions include max,

min, and round.

- Use

maxto find the largest value of one or more values. - Use

minto find the smallest. - Both work on character strings as well as numbers.

- “Larger” and “smaller” use (0-9, A-Z, a-z) to compare letters.

OUTPUT

3

0Functions may only work for certain (combinations of) arguments.

-

maxandminmust be given at least one argument.- “Largest of the empty set” is a meaningless question.

- And they must be given things that can meaningfully be compared.

ERROR

TypeError Traceback (most recent call last)

<ipython-input-52-3f049acf3762> in <module>

----> 1 print(max(1, 'a'))

TypeError: '>' not supported between instances of 'str' and 'int'Functions may have default values for some arguments.

-

roundwill round off a floating-point number. - By default, rounds to zero decimal places.

OUTPUT

4- We can specify the number of decimal places we want.

OUTPUT

3.7Functions attached to objects are called methods

- Functions take another form that will be common in the pandas episodes.

- Methods have parentheses like functions, but come after the variable.

- Some methods are used for internal Python operations, and are marked with double underlines.

PYTHON

my_string = 'Hello world!' # creation of a string object

print(len(my_string)) # the len function takes a string as an argument and returns the length of the string

print(my_string.swapcase()) # calling the swapcase method on the my_string object

print(my_string.__len__()) # calling the internal __len__ method on the my_string object, used by len(my_string)OUTPUT

12

hELLO WORLD!

12- You might even see them chained together. They operate left to right.

PYTHON

print(my_string.isupper()) # Not all the letters are uppercase

print(my_string.upper()) # This capitalizes all the letters

print(my_string.upper().isupper()) # Now all the letters are uppercaseOUTPUT

False

HELLO WORLD

TrueUse the built-in function help to get help for a

function.

- Every built-in function has online documentation.

OUTPUT

Help on built-in function round in module builtins:

round(number, ndigits=None)

Round a number to a given precision in decimal digits.

The return value is an integer if ndigits is omitted or None. Otherwise

the return value has the same type as the number. ndigits may be negative.The Jupyter Notebook has two ways to get help.

- Option 1: Place the cursor near where the function is invoked in a

cell (i.e., the function name or its parameters),

- Hold down Shift, and press Tab.

- Do this several times to expand the information returned.

- Option 2: Type the function name in a cell with a question mark after it. Then run the cell.

Python reports a syntax error when it can’t understand the source of a program.

- Won’t even try to run the program if it can’t be parsed.

ERROR

File "<ipython-input-56-f42768451d55>", line 2

name = 'Feng

^

SyntaxError: EOL while scanning string literalERROR

File "<ipython-input-57-ccc3df3cf902>", line 2

age = = 52

^

SyntaxError: invalid syntax- Look more closely at the error message:

ERROR

File "<ipython-input-6-d1cc229bf815>", line 1

print ("hello world"

^

SyntaxError: unexpected EOF while parsing- The message indicates a problem on first line of the input (“line

1”).

- In this case the “ipython-input” section of the file name tells us that we are working with input into IPython, the Python interpreter used by the Jupyter Notebook.

- The

-6-part of the filename indicates that the error occurred in cell 6 of our Notebook. - Next is the problematic line of code, indicating the problem with a

^pointer.

Python reports a runtime error when something goes wrong while a program is executing.

ERROR

NameError Traceback (most recent call last)

<ipython-input-59-1214fb6c55fc> in <module>

1 age = 53

----> 2 remaining = 100 - aege # mis-spelled 'age'

NameError: name 'aege' is not defined- Fix syntax errors by reading the source and runtime errors by tracing execution.

What Happens When

- Order of operations:

1.1 * radiance = 1.11.1 - 0.5 = 0.6min(radiance, 0.6) = 0.62.0 + 0.6 = 2.6max(2.1, 2.6) = 2.6

- At the end,

radiance = 2.6

Spot the Difference

- Predict what each of the

printstatements in the program below will print. - Does

max(len(rich), poor)run or produce an error message? If it runs, does its result make any sense?

OUTPUT

cmax for strings ranks them alphabetically.

OUTPUT

tinOUTPUT

4max(len(rich), poor) throws a TypeError. This turns into

max(4, 'tin') and as we discussed earlier a string and

integer cannot meaningfully be compared.

ERROR

TypeError Traceback (most recent call last)

<ipython-input-65-bc82ad05177a> in <module>

----> 1 max(len(rich), poor)

TypeError: '>' not supported between instances of 'str' and 'int'Why Not?

Why is it that max and min do not return

None when they are called with no arguments?

max and min return TypeErrors in this case

because the correct number of parameters was not supplied. If it just

returned None, the error would be much harder to trace as

it would likely be stored into a variable and used later in the program,

only to likely throw a runtime error.

Last Character of a String

If Python starts counting from zero, and len returns the

number of characters in a string, what index expression will get the

last character in the string name? (Note: we will see a

simpler way to do this in a later episode.)

Explore the Python docs!

The official Python documentation is arguably the most complete source of information about the language. It is available in different languages and contains a lot of useful resources. The Built-in Functions page contains a catalogue of all of these functions, including the ones that we’ve covered in this lesson. Some of these are more advanced and unnecessary at the moment, but others are very simple and useful.

Content from String Manipulation

Last updated on 2023-04-27 | Edit this page

Overview

Questions

- How can I manipulate text?

- How can I create neat and dynamic text output?

Objectives

- Extract substrings of interest.

- Format dynamic strings using f-strings.

- Explore Python’s built-in string functions

Key Points

- Strings can be indexed and sliced.

- Strings cannot be directly altered.

- You can build complex strings based on other variables using f-strings and format.

- Python has a variety of useful built-in string functions.

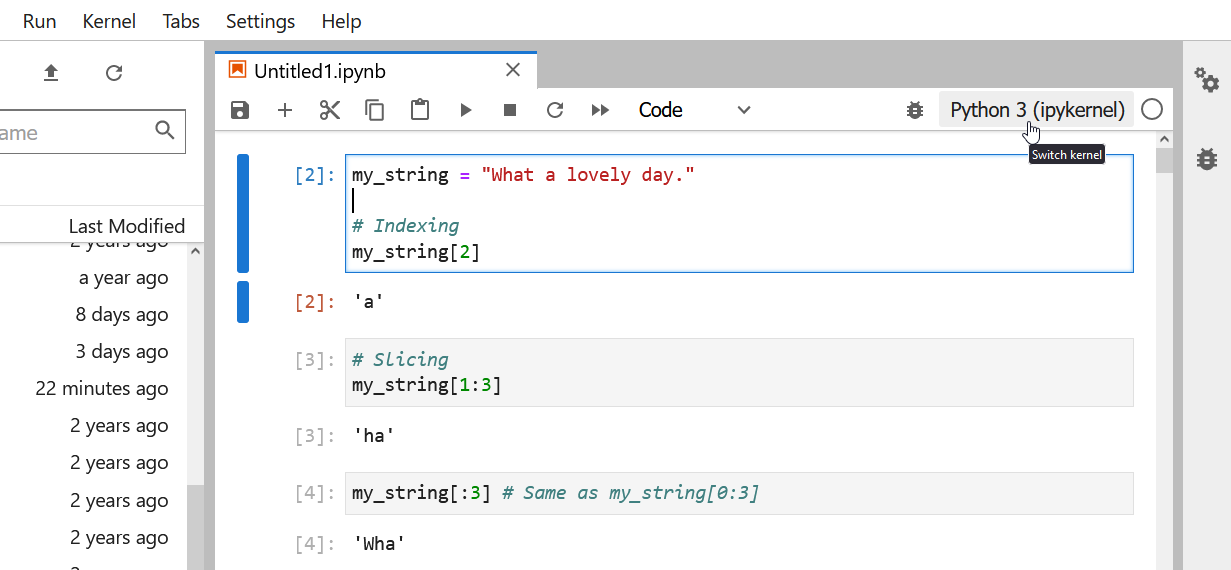

Strings can be indexed and sliced.

As we saw in class during the types lesson, strings in Python can be indexed and sliced

OUTPUT

'a'OUTPUT

'ha'OUTPUT

'Wha'OUTPUT

'hat a lovely day.'Strings are immutable.

We will talk about this concept in more detail when we explore lists. However, for now it is important to note that strings, like simple types, cannot have their values be altered in Python. Instead, a new value is created.

For simple types, this behavior isn’t that noticable:

PYTHON

x = 10

# While this line appears to be changing the value 10 to 11, in reality a new integer with the value 11 is created and assigned to x.

x = x + 1

xOUTPUT

11However, for strings we can easily cause errors if we attempt to change them directly:

ERROR

---------------------------------------------------------------------

TypeError Traceback (most recent call last)

Input In [6], in <cell line: 1>()

----> 1 my_string[-1] = "g"

TypeError: 'str' object does not support item assignmentThus, we need to learn ways in Python to manipulate and build strings. In this instance, we can build a new string using indexing:

OUTPUT

'What a lovely dag'Or use the built-in function str.replace() if we wanted

to replace a larger portion of the string.

OUTPUT

What a lovely dog.Build complex strings based on other variables using format.

What if we want to use values inside of a string? For instance, say we want to print a sentence denoting how many and the percent of samples we dropped and kept as a part of a quality control analysis.

Suppose we have variables denoting how many samples and dropped samples we have:

PYTHON

good_samples = 964

bad_samples = 117

percent_dropped = bad_samples/(good_samples + bad_samples)One option would be to simply put everything in

print:

OUTPUT

Dropped 0.10823311748381129 percent samplesOr we could convert and use addition:

OUTPUT

Dropped 0.10823311748381129% samplesHowever, both of these options don’t give us as much control over how the percent is displayed. Python uses systems called string formatting and f-strings to give us greater control.

We can use Python’s built-in format function to create

better-looking text:

PYTHON

print('Dropped {0:.2%} of samples, with {1:n} samples remaining.'.format(percent_dropped, good_samples))OUTPUT

Dropped 10.82% of samples, with 964 samples remaining.Calls to format have a number of components:

- The use of brackets

{}to create replacement fields. - The index inside each bracket (0 and 1) to denote the index of the variable to use.

- Format instructions.

.2%indicates that we want to format the number as a percent with 2 decimal places.nindicates that we want to format as a number. -

formatwe call format on the string, and as arguments give it the variables we want to use. The order of variables here are the indices referenced in replacement fields.

For instance, we can switch or repeat indices:

PYTHON

print('Dropped {1:.2%} of samples, with {0:n} samples remaining.'.format(percent_dropped, good_samples))

print('Dropped {0:.2%} of samples, with {0:n} samples remaining.'.format(percent_dropped, good_samples))OUTPUT

Dropped 96400.00% of samples, with 964 samples remaining.

Dropped 10.82% of samples, with 0.108233 samples remaining.Python has a shorthand for using format called

f-strings. These strings let us directly use variables and

create expressions inside of strings. We denote an f-string

by putting f in front of the string definition:

PYTHON

print(f'Dropped {percent_dropped:.2%} of samples, with {good_samples:n} samples remaining.')

print(f'Dropped {100*(bad_samples/(good_samples + bad_samples)):.2f}% of samples, with {good_samples:n} samples remaining.')OUTPUT

Dropped 10.82% of samples, with 964 samples remaining.

Dropped 10.82% of samples, with 964 samples remaining.Here,

{100*(bad_samples/(good_samples + bad_samples)):.2f} is

treated as an expression, and then printed as a float

with 2 digits.

Full documenation on all of the string formatting mini-language can be found here.

Python has many useful built-in functions for string manipulation.

Python has many built-in methods for manipulating strings; simple and powerful text manipulation is considered one of Python’s strengths. We will go over some of the more common and useful functions here, but be aware that there are many more you can find in the official documentation.

Dealing with whitespace

str.strip() strips the whitespace from the beginning and

ending of a string. This is especially useful when reading in files

which might have hidden spaces or tabs at the end of lines.

Note that \t denotes a tab character.

OUTPUT

'a'str.split() strips a string up into a list of strings by

some character. By default it uses whitespace, but we can give any set

character to split by. We will learn how to use lists in the next

session.

OUTPUT

['My',

'favorite',

'sentence',

'I',

'hope',

'nothing',

'bad',

'happens',

'to',

'it']OUTPUT

['2023', '04', '12']Pattern matching

str.find() find the first occurrence of the specified

string inside the search string, and str.rfind() finds the

last.

OUTPUT

11OUTPUT

38str.startswith() and str.endswith() perform

a simlar function but return a bool based on whether or not

the string starts or ends with a particular string.

OUTPUT

FalseOUTPUT

TrueCase

str.upper(), str.lower(),

str.capitalize(), and str.title() all change

the capitalization of strings.

PYTHON

my_string = "liters (coffee) per minute"

print(my_string.lower())

print(my_string.upper())

print(my_string.capitalize())

print(my_string.title())OUTPUT

liters (coffee) per minute

LITERS (COFFEE) PER MINUTE

Liters (coffee) per minute

Liters (Coffee) Per MinuteChallenge

A common problem when analyzing data is that multiple features of the data will be stored as a column name or single string.

For instance, consider the following column headers:

WT_0Min_1 WT_0Min_2 X.RAB7A_0Min_1 X.RAB7A_0Min_2 WT_5Min_1 WT_5Min_2 X.RAB7A_5Min_1 X.RAB7A_5Min_2 X.NPC1_5Min_1 X.NPC1_5Min_2There are two variables of interest, the time, 0, 5, or 60 minutes post-infection, and the genotype, WT, NPC1 knockout and RAB7A knockout. We also have replicate information at the end of each column header. For now, let’s just try extracting the timepoint, genotype, and replicate from a single column header.

Given the string:

Try to print the string:

Sample is 0min RABA7A knockout, replicate 1. Using f-strings, slicing and indexing, and built-in string functions.

You can try to use lists as a challenge, but its fine to instead get

each piece of information separately from sample_info.

Content from Using Objects

Last updated on 2023-04-20 | Edit this page

Overview

Questions

- What is an object?

Objectives

- Define objects.

- Use an object’s methods.

- Call a constructor for an object.

Key Points

- Objects are entities with both data and methods

- Methods are unique to objects, and so methods with the same name may work differently on different objects.

- You can create an object using a constructor.

- Objects need to be explicitly copied.

Objects are entities with both data and methods

In addition to basic types, Python also has objects we refer to the type of an object as its class. In other programming languages there is a more definite distinction between base types and objects, but in Python these terms essentially interchangable. However, thinking about more complex data structures through an object-oriented lense will allow us to better understand how to write effective code.

We can think of an object as an entity which has two aspects: data and methods. The data (sometimes called properties) is what we would typically think of as the value of that object; what it is storing. Methods define what we can do with an object, essentially they are functions specific to an object (you will often hear function and method used interchangebly).

Next session we will explore the list object in Python.

A list consists of its data, or what is stored in the list.

PYTHON

# Here, we are storing 3, 67, 1, and 33 as the data inside the list object

sizes = [3,67,1,33]

# Reverse is a list method which reverses that list

sizes.reverse()

print(sizes)OUTPUT

[33, 1, 67, 3]Methods are unique to objects, and so methods with the same name may work differently on different objects.

In Python, many objects share method names. However, those methods may do different things.

We have already seen this implicitly looking at how operations like addition interact with different basic types. For instance, performing multiplication on a list:

OUTPUT

[33, 1, 67, 3, 33, 1, 67, 3, 33, 1, 67, 3]May not have the behavior you expect. Whenever our code isn’t doing

what we want, one of the first things to check is that the

type of our variables is what we expect.

You can create an object using a constructor.

All objects have constructors, which are special function that create

that object. Constructors are often called implicitly when we, for

instance, define a list using the [] notation or create a

Pandas dataframe object using read_csv.

However, objects also have explicit constructors which can be called

directly.

PYTHON

# The basic list constructor

new_sizes = list()

new_sizes.append(33)

new_sizes.append(1)

new_sizes.append(67)

new_sizes.append(3)

print(new_sizes)OUTPUT

[33, 1, 67, 3]Objects need to be explicitly copied.

We will continue to circle back to this point, but one imporant note about complex objects is that they need to be explicitly copied. This is due to them being mutable, which we will discuss more next session.

We can have multiple variables refer to the same object. This can result in us unknowningly changing an object we didn’t expect to.

For instance, two variables can refer to the same list:

PYTHON

# Here, we lose the original sizes list and now both variables are pointing to the same list

sizes = new_sizes

print(sizes)

print(new_sizes)

# Thus, if we change sizes:

sizes.append(100)

# We see that new_sizes has also changed

print(sizes)

print(new_sizes)OUTPUT

[33, 1, 67, 3]

[33, 1, 67, 3]

[33, 1, 67, 3, 100]

[33, 1, 67, 3, 100]In order to make a variable refer to a different copy of a list, we need to explicitly copy it. One way to do this is to use a copy constructor. Most objects in Python have a copy constructor which accepts another object of the same type and creates a copy of it.

PYTHON

# Calling the copy constructor

sizes = list(new_sizes)

print(sizes)

print(new_sizes)

# Now if we change sizes:

sizes.append(100)

# We see that new_sizes does NOT change

print(sizes)

print(new_sizes)OUTPUT

[33, 1, 67, 3, 100]

[33, 1, 67, 3, 100]

[33, 1, 67, 3, 100, 100]

[33, 1, 67, 3, 100]However, even copying an object can sometimes not be enough. Some objects are able to store other objects. If this is the case, the internal object might not change.

OUTPUT

[[33, 1, 67, 3, 100, 100], [33, 1, 67, 3, 100, 100], [33, 1, 67, 3, 100, 100], [33, 1, 67, 3, 100, 100]]PYTHON

# While the external list here is different, the internal lists are still the same

new_lol = list(list_of_lists)

print(new_lol)OUTPUT

[[33, 1, 67, 3, 100, 100], [33, 1, 67, 3, 100, 100], [33, 1, 67, 3, 100, 100], [33, 1, 67, 3, 100, 100]]OUTPUT

[[100, 3, 67, 1, 33], [100, 3, 67, 1, 33], [100, 3, 67, 1, 33], [100, 3, 67, 1, 33]]Overall, we need to be careful in Python when copying objects. This is one of the reasons why most libaries’ methods return new objects as opposed to altering existing objects, so as to avoid any confusion over different object versions. Additionally, we can perform a deep copy of an object, which is an object copy which copys and stored objects.

Challenge

Pandas is one of the most popular python libraries for manipulating data which we will be diving into next week. Take a look at the official documentation for Pandas and try to find how to create a deep copy of a dataframe. Is what you found a function or a method? You can find the documentation here

Dataframes in Pandas have a copy method found

here.

This method by default performs a deep copy as the deep

argument’s default value is True.

Content from Lists

Last updated on 2023-04-20 | Edit this page

Overview

Questions

- How can I store many values together?

- How do I access items stored in a list?

- How are variables assigned to lists different than variables assigned to values?

Objectives

- Explain what a list is.

- Create and index lists of simple values.

- Change the values of individual elements

- Append values to an existing list

- Reorder and slice list elements

- Create and manipulate nested lists

Key Points

-

[value1, value2, value3, ...]creates a list. - Lists can contain any Python object, including lists (i.e., list of lists).

- Lists are indexed and sliced with square brackets (e.g., list[0] and list[2:9]), in the same way as strings and arrays.

- Lists are mutable (i.e., their values can be changed in place).

- Strings are immutable (i.e., the characters in them cannot be changed).

Python lists

We create a list by putting values inside square brackets and separating the values with commas:

OUTPUT

odds are: [1, 3, 5, 7]The empty list

Similar to an empty string, we can create an empty list with a

len of 0 and no elements. We create an empty list as

empty_list = [] or empty_list = list(), and it

is useful when we want to build up a list element by element.

We can access elements of a list using indices – numbered positions of elements in the list. These positions are numbered starting at 0, so the first element has an index of 0.

PYTHON

print('first element:', odds[0])

print('last element:', odds[3])

print('"-1" element:', odds[-1])OUTPUT

first element: 1

last element: 7

"-1" element: 7Yes, we can use negative numbers as indices in Python. When we do so,

the index -1 gives us the last element in the list,

-2 the second to last, and so on. Because of this,

odds[3] and odds[-1] point to the same element

here.

There is one important difference between lists and strings: we can change the values in a list, but we cannot change individual characters in a string. For example:

PYTHON

names = ['Curie', 'Darwing', 'Turing'] # typo in Darwin's name

print('names is originally:', names)

names[1] = 'Darwin' # correct the name

print('final value of names:', names)OUTPUT

names is originally: ['Curie', 'Darwing', 'Turing']

final value of names: ['Curie', 'Darwin', 'Turing']works, but:

ERROR

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-8-220df48aeb2e> in <module>()

1 name = 'Darwin'

----> 2 name[0] = 'd'

TypeError: 'str' object does not support item assignmentdoes not. This is because a list is mutable while a string is immutable.

Mutable and immutable

Data which can be modified in place is called mutable, while data which cannot be modified is called immutable. Strings and numbers are immutable. This does not mean that variables with string or number values are constants, but when we want to change the value of a string or number variable, we can only replace the old value with a completely new value.

Lists and arrays, on the other hand, are mutable: we can modify them after they have been created. We can change individual elements, append new elements, or reorder the whole list. For some operations, like sorting, we can choose whether to use a function that modifies the data in-place or a function that returns a modified copy and leaves the original unchanged.

Be careful when modifying data in-place. If two variables refer to the same list, and you modify the list value, it will change for both variables!

PYTHON

mild_salsa = ['peppers', 'onions', 'cilantro', 'tomatoes']

hot_salsa = mild_salsa # <-- mild_salsa and hot_salsa point to the *same* list data in memory

hot_salsa[0] = 'hot peppers'

print('Ingredients in mild salsa:', mild_salsa)

print('Ingredients in hot salsa:', hot_salsa)OUTPUT

Ingredients in mild salsa: ['hot peppers', 'onions', 'cilantro', 'tomatoes']

Ingredients in hot salsa: ['hot peppers', 'onions', 'cilantro', 'tomatoes']Let’s go to this link to visualize this code.

If you want variables with mutable values to be independent, you must make a copy of the value when you assign it.

PYTHON

mild_salsa = ['peppers', 'onions', 'cilantro', 'tomatoes']

hot_salsa = list(mild_salsa) # <-- makes a *copy* of the list

hot_salsa[0] = 'hot peppers'

print('Ingredients in mild salsa:', mild_salsa)

print('Ingredients in hot salsa:', hot_salsa)OUTPUT

Ingredients in mild salsa: ['peppers', 'onions', 'cilantro', 'tomatoes']

Ingredients in hot salsa: ['hot peppers', 'onions', 'cilantro', 'tomatoes']Because of pitfalls like this, code which modifies data in place can be more difficult to understand. However, it is often far more efficient to modify a large data structure in place than to create a modified copy for every small change. You should consider both of these aspects when writing your code.

Nested Lists

Since a list can contain any Python variables, it can even contain other lists.

For example, you could represent the products on the shelves of a

small grocery shop as a nested list called veg:

veg is represented as a shelf full

of produce. There are three rows of vegetables on the shelf, and each

row contains three baskets of vegetables. We can label each basket

according to the type of vegetable it contains, so the top row contains

(from left to right) lettuce, lettuce, and peppers.To store the contents of the shelf in a nested list, you write it this way:

PYTHON

veg = [['lettuce', 'lettuce', 'peppers', 'zucchini'],

['lettuce', 'lettuce', 'peppers', 'zucchini'],

['lettuce', 'cilantro', 'peppers', 'zucchini']]Here are some visual examples of how indexing a list of lists

veg works. First, you can reference each row on the shelf

as a separate list. For example, veg[2] represents the

bottom row, which is a list of the baskets in that row.

![veg is now shown as a list of three rows, with veg[0] representing the top row of three baskets, veg[1] representing the second row, and veg[2] representing the bottom row.](fig/05_groceries_veg0.png)

veg is now shown as a list of three

rows, with veg[0] representing the top row of three

baskets, veg[1] representing the second row, and

veg[2] representing the bottom row.Index operations using the image would work like this:

OUTPUT

['lettuce', 'cilantro', 'peppers', 'zucchini']OUTPUT

['lettuce', 'lettuce', 'peppers', 'zucchini']To reference a specific basket on a specific shelf, you use two

indexes. The first index represents the row (from top to bottom) and the

second index represents the specific basket (from left to right). ![veg is now shown as a two-dimensional grid, with each basket labeled according to its index in the nested list. The first index is the row number and the second index is the basket number, so veg[1][3] represents the basket on the far right side of the second row (basket 4 on row 2): zucchini](fig/05_groceries_veg00.png)

OUTPUT

'lettuce'OUTPUT

'peppers'Manipulating Lists

There are many ways to change the contents of lists besides assigning new values to individual elements:

OUTPUT

odds after adding a value: [1, 3, 5, 7, 11]PYTHON

removed_element = odds.pop(0)

print('odds after removing the first element:', odds)

print('removed_element:', removed_element)OUTPUT

odds after removing the first element: [3, 5, 7, 11]

removed_element: 1OUTPUT

odds after reversing: [11, 7, 5, 3]While modifying in place, it is useful to remember that Python treats lists in a slightly counter-intuitive way.

As we saw earlier, when we modified the mild_salsa list

item in-place, if we make a list, (attempt to) copy it and then modify

this list, we can cause all sorts of trouble. This also applies to

modifying the list using the above functions:

PYTHON

odds = [3, 5, 7]

primes = odds

primes.append(2)

print('primes:', primes)

print('odds:', odds)OUTPUT

primes: [3, 5, 7, 2]

odds: [3, 5, 7, 2]This is because Python stores a list in memory, and then can use

multiple names to refer to the same list. If all we want to do is copy a

(simple) list, we can again use the list function, so we do

not modify a list we did not mean to:

PYTHON

odds = [3, 5, 7]

primes = list(odds)

primes.append(2)

print('primes:', primes)

print('odds:', odds)OUTPUT

primes: [3, 5, 7, 2]

odds: [3, 5, 7]Subsets of lists can be accessed by slicing, similar to how we accessed ranges of positions in a strings.

PYTHON

binomial_name = 'Drosophila melanogaster'

genus = binomial_name[0:10]

print('genus:', genus)

species = binomial_name[11:23]

print('species:', species)

chromosomes = ['X', 'Y', '2', '3', '4']

autosomes = chromosomes[2:5]

print('autosomes:', autosomes)

last = chromosomes[-1]

print('last:', last)OUTPUT

genus: Drosophila

species: melanogaster

autosomes: ['2', '3', '4']

last: 4Slicing From the End

Use slicing to access only the last four characters of a string or entries of a list.

PYTHON

string_for_slicing = 'Observation date: 02-Feb-2013'

list_for_slicing = [['fluorine', 'F'],

['chlorine', 'Cl'],

['bromine', 'Br'],

['iodine', 'I'],

['astatine', 'At']]Desired output:

OUTPUT

'2013'

[['chlorine', 'Cl'], ['bromine', 'Br'], ['iodine', 'I'], ['astatine', 'At']]Would your solution work regardless of whether you knew beforehand the length of the string or list (e.g. if you wanted to apply the solution to a set of lists of different lengths)? If not, try to change your approach to make it more robust.

Hint: Remember that indices can be negative as well as positive

Non-Continuous Slices

So far we’ve seen how to use slicing to take single blocks of successive entries from a sequence. But what if we want to take a subset of entries that aren’t next to each other in the sequence?

You can achieve this by providing a third argument to the range within the brackets, called the step size. The example below shows how you can take every third entry in a list:

PYTHON

primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37]

subset = primes[0:12:3]

print('subset', subset)OUTPUT

subset [2, 7, 17, 29]Notice that the slice taken begins with the first entry in the range, followed by entries taken at equally-spaced intervals (the steps) thereafter. If you wanted to begin the subset with the third entry, you would need to specify that as the starting point of the sliced range:

PYTHON

primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37]

subset = primes[2:12:3]

print('subset', subset)OUTPUT

subset [5, 13, 23, 37]Use the step size argument to create a new string that contains only every other character in the string “In an octopus’s garden in the shade”. Start with creating a variable to hold the string:

What slice of beatles will produce the following output

(i.e., the first character, third character, and every other character

through the end of the string)?

OUTPUT

I notpssgre ntesaeIf you want to take a slice from the beginning of a sequence, you can omit the first index in the range:

PYTHON

date = 'Monday 4 January 2016'

day = date[0:6]

print('Using 0 to begin range:', day)

day = date[:6]

print('Or omit the beginning index to slice from 0:', day)OUTPUT

Using 0 to begin range: Monday

Or omit the beginning index to slice from 0: MondayAnd similarly, you can omit the ending index in the range to take a slice to the very end of the sequence:

PYTHON

months = ['jan', 'feb', 'mar', 'apr', 'may', 'jun', 'jul', 'aug', 'sep', 'oct', 'nov', 'dec']

sond = months[8:12]

print('With known last position:', sond)

sond = months[8:len(months)]

print('Using len() to get last entry:', sond)

sond = months[8:]

print('Or omit the final index to go to the end of the list:', sond)OUTPUT

With known last position: ['sep', 'oct', 'nov', 'dec']

Using len() to get last entry: ['sep', 'oct', 'nov', 'dec']

Or omit the final index to go to the end of the list: ['sep', 'oct', 'nov', 'dec']Going past len

Python does not consider it an error to go past the end of a list when slicing. Python will juse slice until the end of the list:

OUTPUT

['jan', 'feb', 'mar', 'apr', 'may', 'jun', 'jul', 'aug', 'sep', 'oct', 'nov', 'dec']However, trying to get a single item from a list at an index greater than its length will result in an error:

ERROR

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

Input In [5], in <cell line: 1>()

----> 1 months[30]

IndexError: list index out of rangeOverloading

+ usually means addition, but when used on strings or

lists, it means “concatenate”. Given that, what do you think the

multiplication operator * does on lists? In particular,

what will be the output of the following code?

[2, 4, 6, 8, 10, 2, 4, 6, 8, 10][4, 8, 12, 16, 20][[2, 4, 6, 8, 10],[2, 4, 6, 8, 10]][2, 4, 6, 8, 10, 4, 8, 12, 16, 20]

The technical term for this is operator overloading: a

single operator, like + or *, can do different

things depending on what it’s applied to.

Content from For Loops

Last updated on 2023-04-20 | Edit this page

Overview

Questions

- How can I make a program do many things?

- How can I do something for each thing in a list?

Objectives

- Explain what for loops are normally used for.

- Trace the execution of a simple (unnested) loop and correctly state the values of variables in each iteration.

- Write for loops that use the Accumulator pattern to aggregate values.

Key Points

- A for loop executes commands once for each value in a collection.

- A

forloop is made up of a collection, a loop variable, and a body. - The first line of the

forloop must end with a colon, and the body must be indented. - Indentation is always meaningful in Python.

- Loop variables can be called anything (but it is strongly advised to have a meaningful name to the looping variable).

- The body of a loop can contain many statements.

- Use

rangeto iterate over a sequence of numbers.

A for loop executes commands once for each value in a collection.

- Doing calculations on the values in a list one by one is as painful

as working with

pressure_001,pressure_002, etc. - A for loop tells Python to execute some statements once for each value in a list, a character string, or some other collection.

- “for each thing in this group, do these operations”

- This

forloop is equivalent to:

- And the

forloop’s output is:

OUTPUT

2

3

5A for loop is made up of a collection, a loop variable,

and a body.

- The collection,

[2, 3, 5], is what the loop is being run on. - The body,

print(number), specifies what to do for each value in the collection. - The loop variable,

number, is what changes for each iteration of the loop.- The “current thing”.

The first line of the for loop must end with a colon,

and the body must be indented.

- The colon at the end of the first line signals the start of a block of statements.

- Python uses indentation rather than

{}orbegin/endto show nesting.- Any consistent indentation is legal, but almost everyone uses four spaces.

OUTPUT

IndentationError: expected an indented block- Indentation is always meaningful in Python.

ERROR

File "<ipython-input-7-f65f2962bf9c>", line 2

lastName = "Smith"

^

IndentationError: unexpected indent- This error can be fixed by removing the extra spaces at the beginning of the second line.

Loop variables can be called anything.

- As with all variables, loop variables are:

- Created on demand.

- Meaningless: their names can be anything at all.

The body of a loop can contain many statements.

- But no loop should be more than a few lines long.

- Hard for human beings to keep larger chunks of code in mind.

OUTPUT

2 4 8

3 9 27

5 25 125Use range to iterate over a sequence of numbers.

- The built-in function

rangeproduces a sequence of numbers.- Not a list: the numbers are produced on demand to make looping over large ranges more efficient.

-

range(N)is the numbers 0..N-1- Exactly the legal indices of a list or character string of length N

OUTPUT

a range is not a list: range(0, 3)

0

1

2The Accumulator pattern turns many values into one.

- A common pattern in programs is to:

- Initialize an accumulator variable to zero, the empty string, or the empty list.

- Update the variable with values from a collection.

PYTHON

# Sum the first 5 integers.

my_sum = 0 # Line 1

for number in range(5): # Line 2

my_sum = my_sum + (number + 1) # Line 3

print(my_sum) # Line 4OUTPUT

15- Read

total = total + (number + 1)as:- Add 1 to the current value of the loop variable

number. - Add that to the current value of the accumulator variable

total. - Assign that to

total, replacing the current value.

- Add 1 to the current value of the loop variable

- We have to add

number + 1becauserangeproduces 0..9, not 1..10.- You could also have used

numberandrange(11).

- You could also have used

We can trace the program output by looking at which line of code is being executed and what each variable’s value is at each line:

| Line No | Variables |

|---|---|

| 1 | my_sum = 0 |

| 2 | my_sum = 0 number = 0 |

| 3 | my_sum = 1 number = 0 |

| 2 | my_sum = 1 number = 1 |

| 3 | my_sum = 3 number = 1 |

| 2 | my_sum = 3 number = 2 |

| 3 | my_sum = 6 number = 2 |

| 2 | my_sum = 6 number = 3 |

| 3 | my_sum = 10 number = 3 |

| 2 | my_sum = 10 number = 4 |

| 3 | my_sum = 15 number = 4 |

| 4 | my_sum = 15 number = 4 |

Let’s double check our work by visualizing the code.

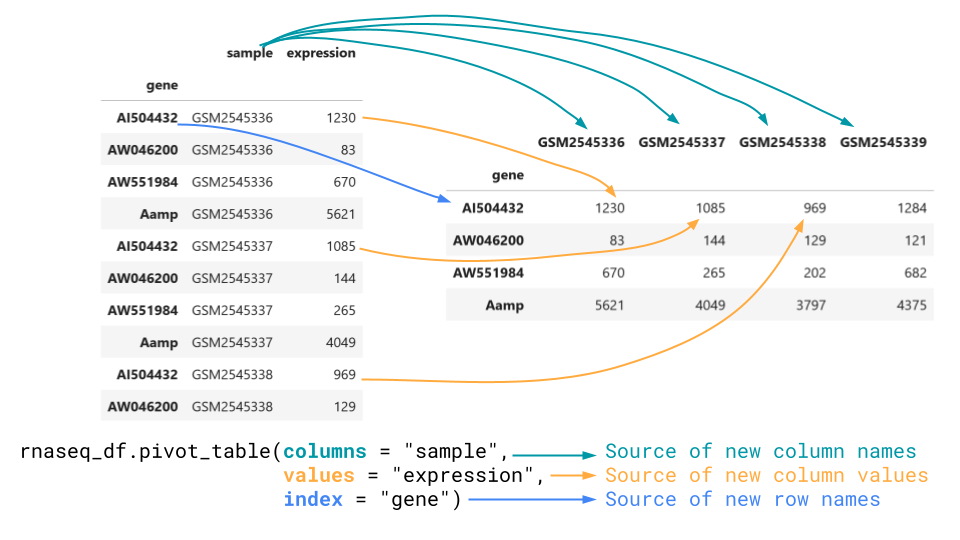

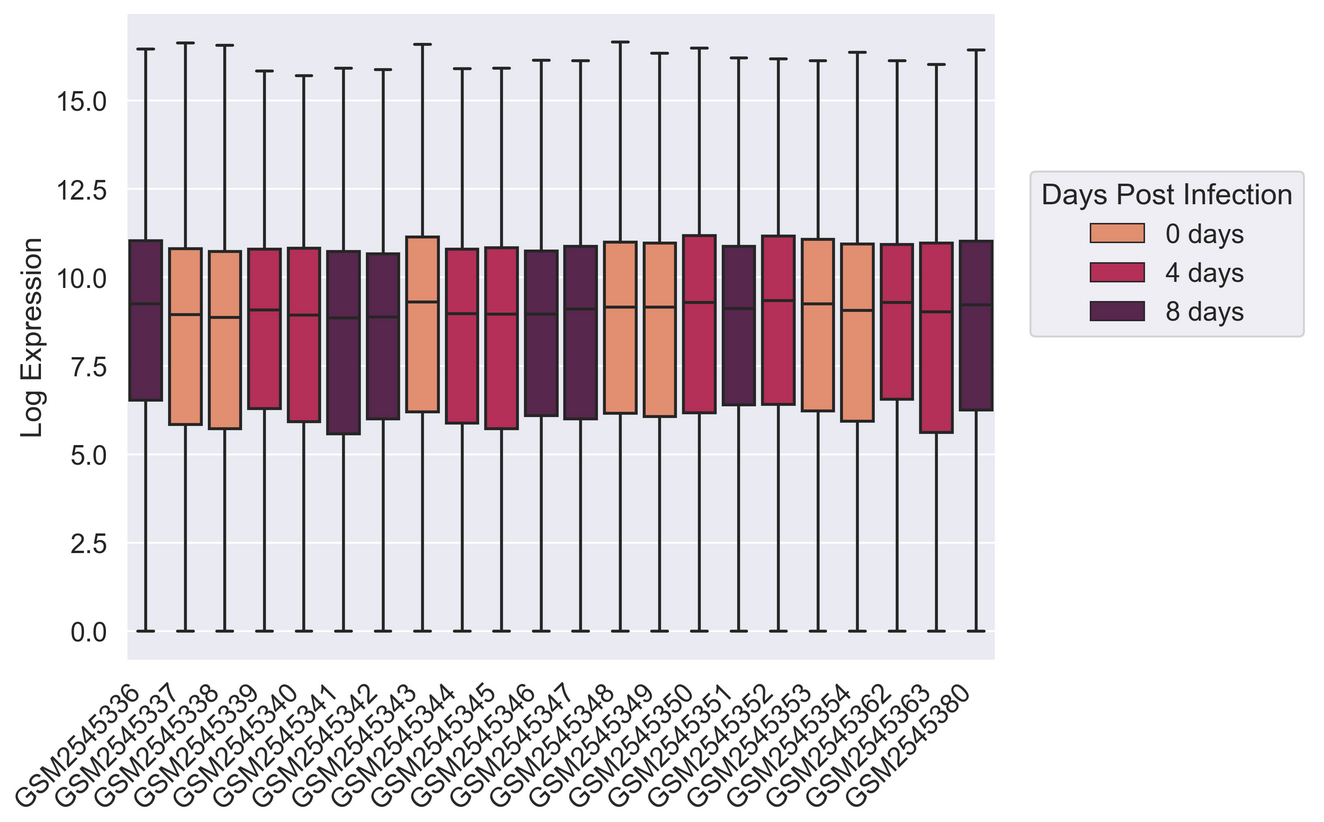

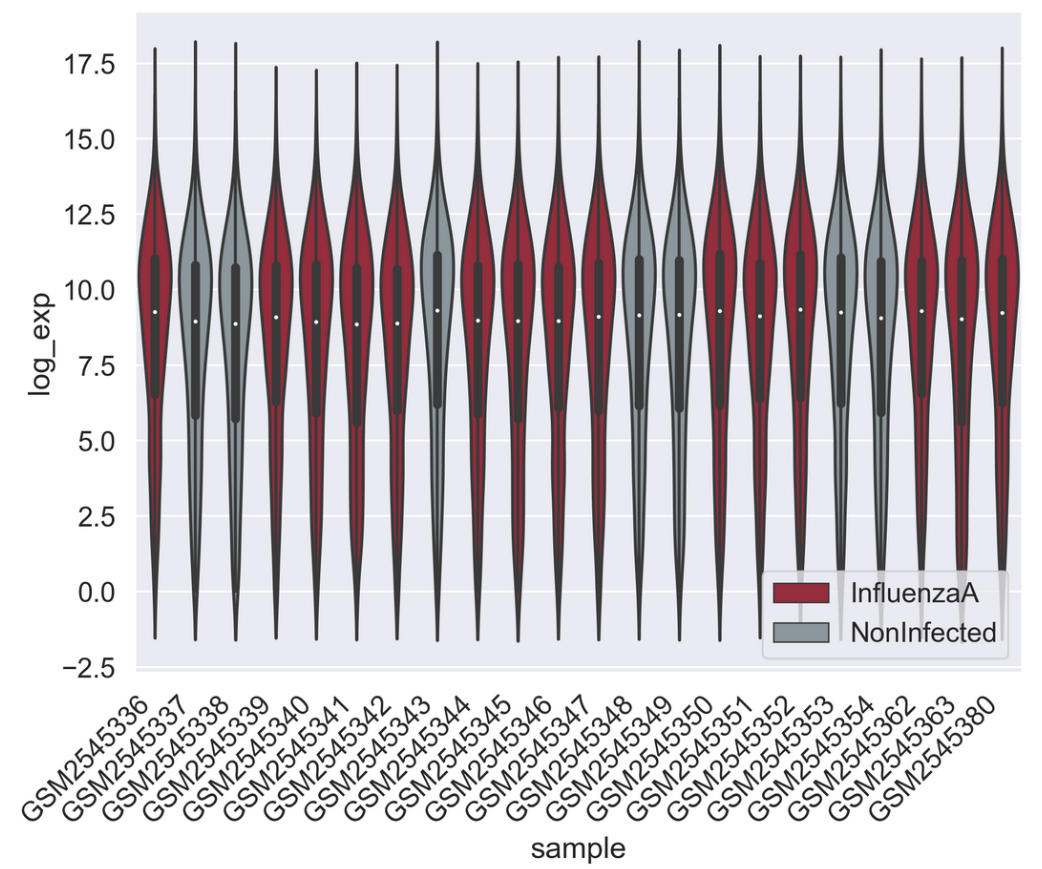

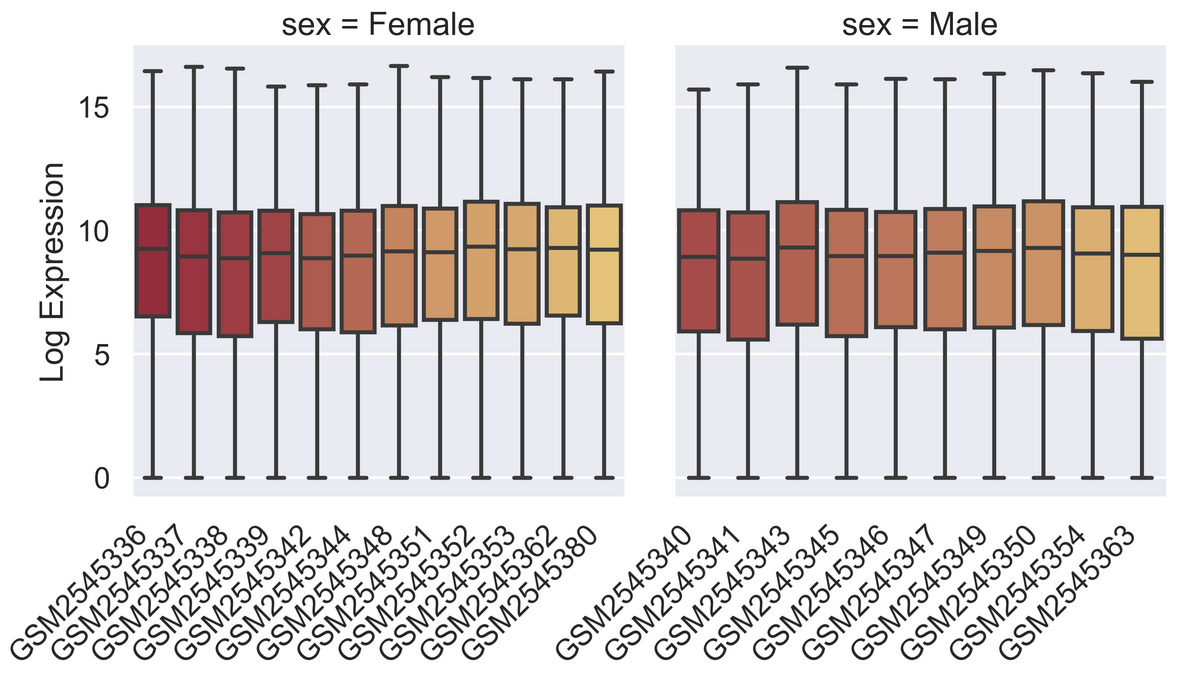

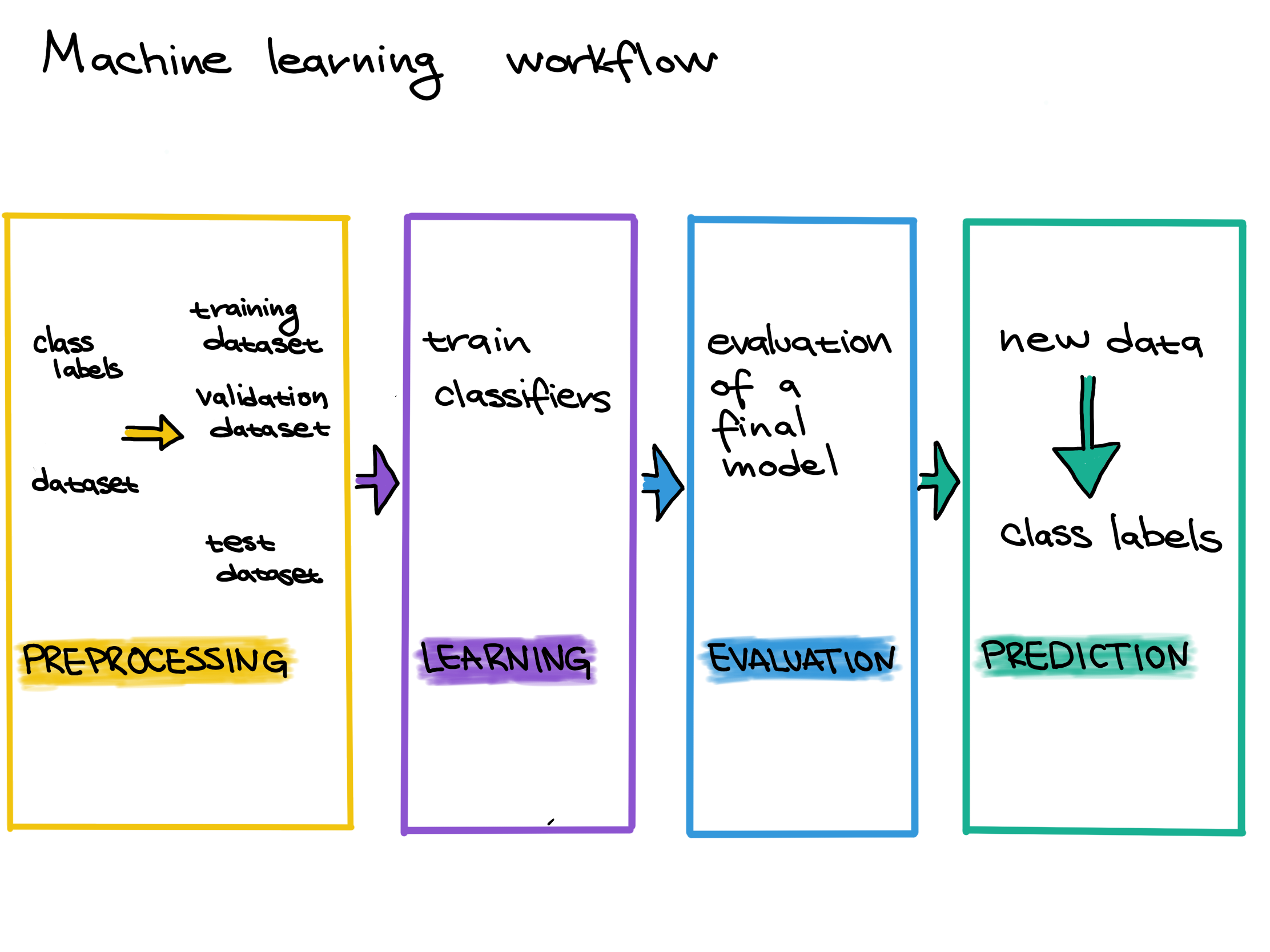

Classifying Errors